Authors: Emmanuele Tidoni, Henning Holle, Michele Scandola, Igor Schindler, Loron Hill, Emily S. Cross

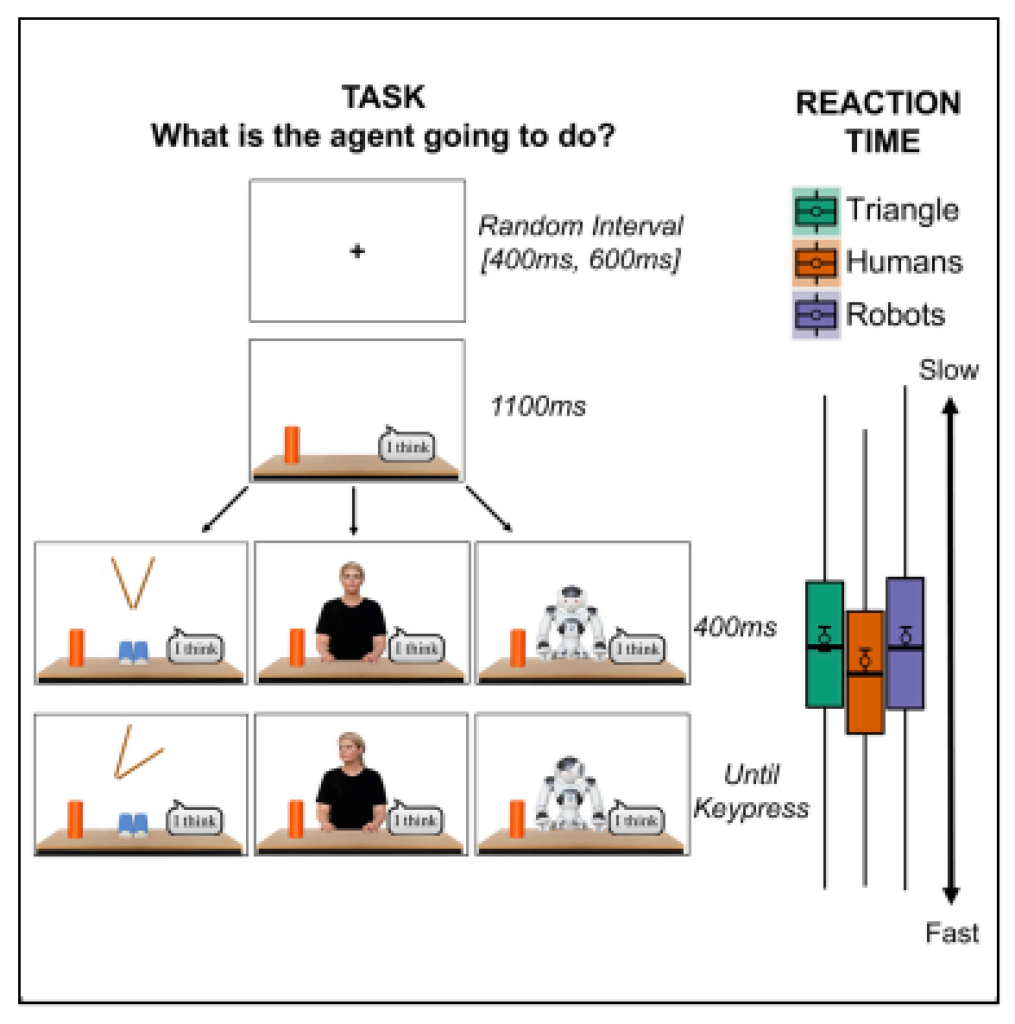

Do people ascribe intentions to humanoid robots as they would to humans or to non-human-like animated objects? In six experiments, we compared people’s ability to extract non-mentalistic (i.e., where an agent is looking) and mentalistic (i.e., what an agent is looking at; what an agent is going to do) information from gaze and directional cues performed by humans, human-like robots, and a non-human-like object. People were faster to infer the mental content of human agents than that of robotic agents. Furthermore, although the absence of differences in control conditions rules out the use of non-mentalizing strategies, the human-like appearance of non-human agents may engage mentalizing processes to solve the task. Overall, the results suggest that human-like robotic actions may be processed differently from both humans’ and objects’ behavior. These findings inform our understanding of the role of an object’s physical features in triggering mentalizing abilities and their relevance for human–robot interaction.